The 5 Most Important Recent Developments in AI

By Dovydas Ceiltuka

From solving maths and science problems to translating with astonishing accuracy between hundreds of languages – not to mention generating images and videos based on a natural language prompt – AI is making strides pretty much across the board.

In this article, I’ll briefly discuss some of the most recent (and the most exciting!) developments that you should know about, but perhaps don’t already.

So, without further ado, let’s dive in!

1. Minerva (Google)

Released on 1 August 2022, Minerva is a language model capable of not only solving maths and science problems submitted in the form of natural language, but also of providing its reasoning behind the answer.

So far, Google has built three versions of the model, getting bigger with each iteration. And, needless to say, “the bigger, the better” remains very much true when it comes to language models.

Minerva was trained on 1.2M papers submitted to arXiv (58GB) and numerous web pages containing maths (TeX, AsciiMath, MathML) (60GB), which is quite a massive dataset.

Now, you might be wondering, “Wait, but wasn’t GPT-3 already great at maths?’. The answer to that is, actually, no. All language models are quite terrible at maths problems. Minerva, on the other hand, is capable of solving high school-level maths problems without much difficulty.

As things stand today, the model’s performance on machine learning problems is low, but, in addition to being good at algebra, it shows problem-solving potential in physics, number theory, pre-calculus, geometry, biology, electrical engineering, chemistry, and astronomy.

2. No Language Left Behind (Meta AI)

I would say that NLLB is not so much a model, as a system. One of its key advantages over most, if not all, currently existing solutions is the massive number of languages it’s able to translate between. Specifically, that number is 200, which amounts to 40,000 language combinations in total!

If you know English and/or either of the 3-4 other major languages, this probably won’t be terribly exciting news for you. However, if you only speak one language that also happens to be not a very popular one, chances are you’re sorely underserved. With systems like NLLB, though, things are likely to change fairly soon.

Both the dataset and the model itself are open-source, which is a huge advantage and a rarity. The dataset was built partially automatically and partially with the aid of human translators, which is a gargantuan undertaking – and the reason why I think “system” is a more apt descriptor in this case than “model”.

In terms of performance, the NLLB outperforms Google Translate in some respects and is also better on average. And make no mistake, this is quite the feat, because hardly any competitor today can do better than the almighty GT. Let alone do better and with tons of more languages!

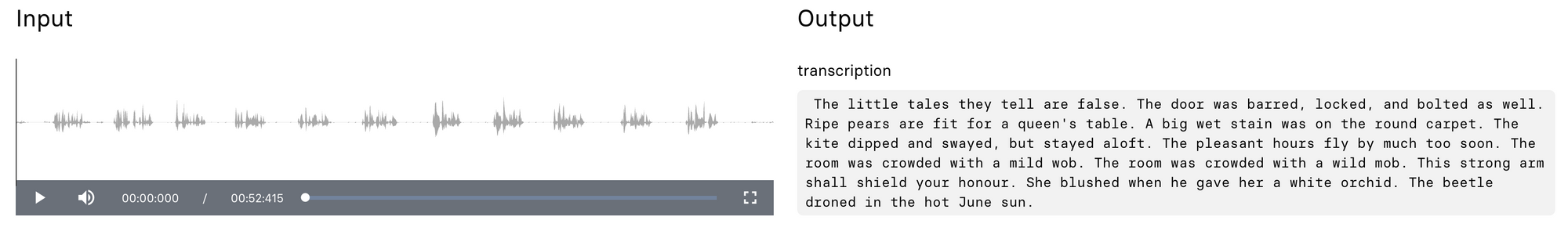

3. Whisper (Open AI)

Released just over a month ago, Whisper is an unsupervised speech-recognition model trained on 680,000 hours of multilingual and multitask supervision. What makes it so impressive is that it’s competitive with existing, state-of-the-art labelled (supervised) datasets.

Another important thing about Whisper is its robustness. What does that mean? It means that it does well even on “dirty” (noisy) datasets.

In a paper called Robust Speech Recognition via Large-Scale Weak Supervision, Open AI’s team has shown that Whisper vastly outperforms supervised models that specialise in LibriSpeech performance (a famously competitive benchmark in ASR) on noisy datasets.

The key takeaway here is, again – the bigger you can get your model to be, the better it will perform. In this case, Whisper does remarkably well even when dealing with noisy recordings, such as people talking over the phone or at home.

Overall, Whisper’s performance is approaching human-level robustness and accuracy – at least when it comes to English speech recognition.

4. Stable Diffusion (Stability AI)

Okay, this one is not only important, but also super fun. Stable Diffusion, developed by Stability AI, is a text-to-image synthesis model trained and fine-tuned on a number of open image datasets.

The model is open source, which means you can download it for free and generate any number of 512x512 images using consumer hardware. If your system has at least 10GB of RAM, the process will take no longer than a few seconds.

Alternatively, you can play around with it on Hugging Face here without having to worry about whether your rig is fast enough to get the job done quickly.

The way Stable Diffusion works, from the user’s point of view, is very straightforward – you type in a short description of the image you want and click “Generate Image”. That’s it!

To make things more interesting, you can also indicate your preferred style. For instance, rather than typing, “A pig riding a bicycle”, you can do, “A pig riding a bicycle in the style of Monet”. The only limit here is your imagination and tolerance level for trippy imagery.

In contrast to many other advanced models, with Stable Diffusion you don’t need any sophisticated infrastructure or thousands of GPUs to run. It works beautifully even on a simple mid-range laptop or PC.

5. Make-a-Video (Meta AI)

The last thing I want to briefly touch on in this article is Meta AI’s Make-a-Video. The idea here is largely the same as that behind Stable Diffusion – turning text prompts into video footage. Only in this case, you’d need a multi-GPU system to run it.

Make-a-Video was first trained on 2.3B text-image pairs and then fine-tuned using 20M internet videos. Meta AI claims it’s competitive with currently existing, fully supervised models, but in a zero-shot transfer setting and no fine-tuning (the latter isn’t quite true, but close enough).

Taking a look at the accompanying paper quickly reveals just how difficult it was to make something like this, especially considering the model’s output, which is highly detailed and realistic.

Obviously, when I say “video”, I don’t mean a clip that’s a minute long or even longer. We’re talking maybe a few seconds of footage here. Still, the result is quite amazing.

You can even use a series of images that you already have, and Make-a-Video will fill in the gaps for you, creating a short clip.

Conclusion

And that’ll do it for the review this time. With AI developing in leaps and bounds, many new discoveries and solutions are sure to come in the coming months and years. Who knows, maybe something new and amazing will have already dropped by the time you read this article! In any case, you can expect more updates from me in the near future.