Web Scraping 101: Extracting Data from Websites Using Python

Author: Linda Oranya

There is really no data science without data! In fact, if you want to do any kind of science at all, you first need to get data. The good news is that there is a lot of data out there that can be accessed and used. But the real question is how do we get our hands on this data that’s lying around everywhere? Well, there are many ways we can do this, and today I am going to introduce one of those ways to you — web scraping!

So what exactly is web scraping? Just like the name implies, it is the act of gathering or extracting data from the web.

There are numerous websites out there, and can you imagine the wealth of data you can get from these websites, and the wealth of insights you can get from it? Let me give you an idea of the kinds of nuggets you can find — you can get insights on customers, from what people feel about products and how they perceive an action, all the way through to knowing what their preferences are! And what’s more, you can use all of these details to build a model that can predict future prices or whatever it is you need to achieve.

Yes, all of that just by scraping the web! But, it is important to note that not all websites allow web scraping, so you’ll have to check in advance before you try! Now let’s get into it.

Web scraping is really very tailored to the website you want to scrape. What I mean here is that you need to understand the structure of the website to successfully scrape it. But not to worry, once you get the drift of one website, you basically use the same technique for others. For the purpose of this article, I will be scraping the Etsy website. The Etsy website contains various keywords you can scrape for, but it would be good if the site was able to allow users to put in the keyword they want to scrape for, as well as the number of pages they want to scrape.

To scrape the web successfully, you need a good understanding of HTML structures. But don't worry if you don’t, because you can watch this detailed video on HTML. For those of you with a good understanding, let’s move on.

Before scraping any website, it is important to check if the website allows scraping; this will save you a lot of stress and will also avoid any kind of blocking at all. You can check this by appending “/robots.txt” to the end of the URL. This will show you details on allowed activities on the website. It is also good to read the Terms & Conditions on the website and the copyright as well.

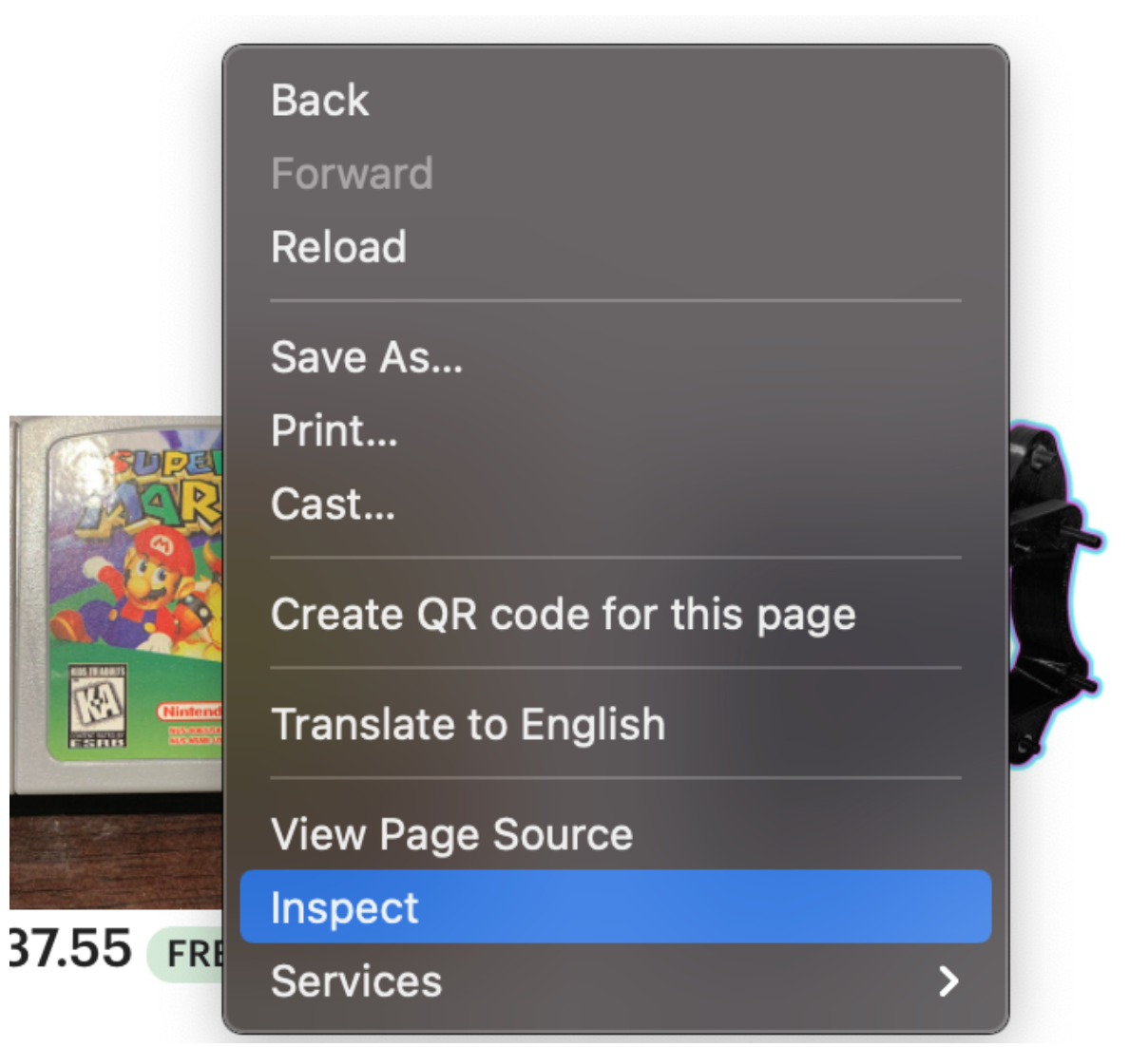

Once that is done, we go ahead to inspect the website, which enables you to see how the contents of the website are represented in HTML & CSS.

Let’s go ahead and inspect the Etsy website with these steps:

- Right click any element on the page and select the “inspect” option.

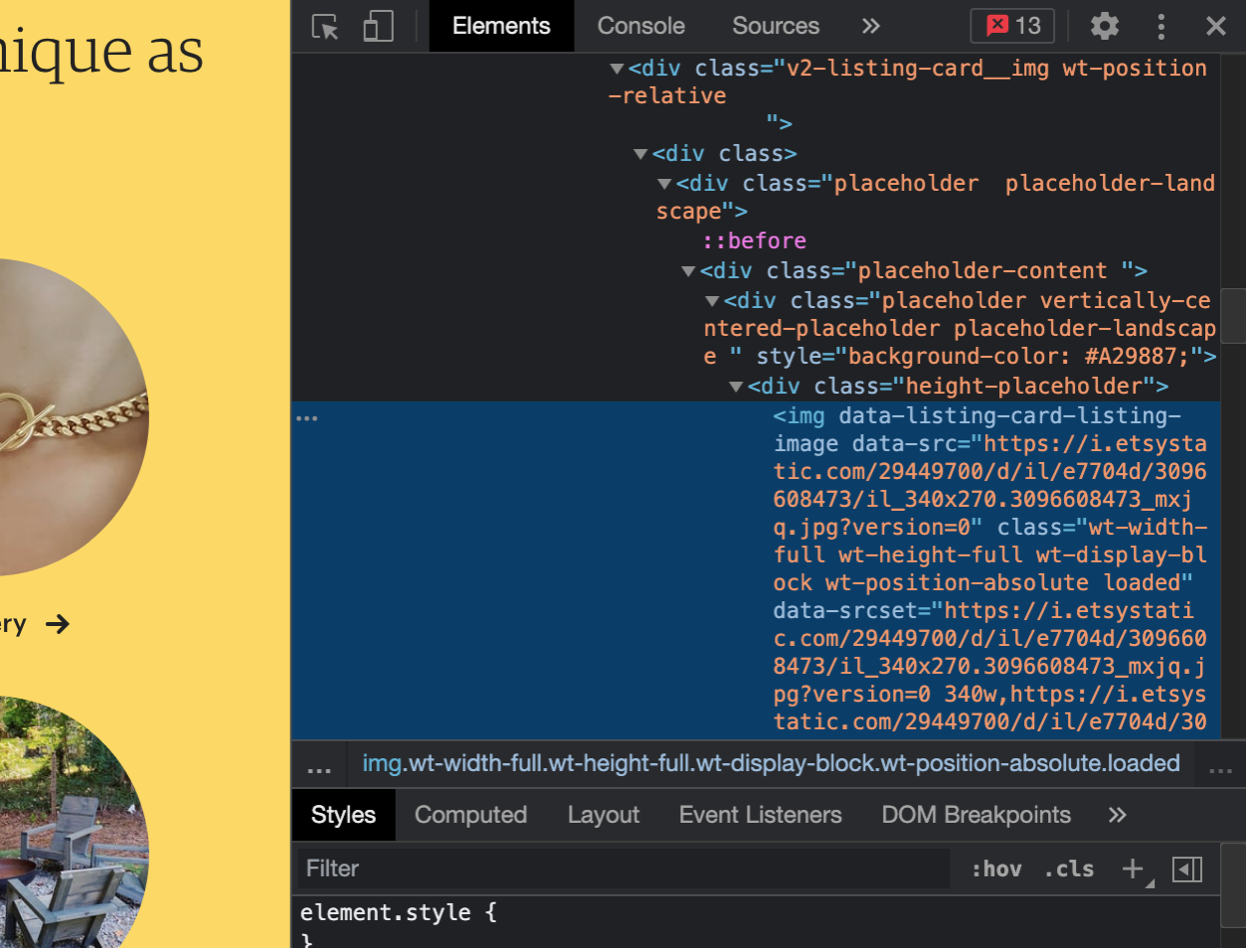

- Once you select that, a side dock will appear on the right side of the website, like this.

- Now with this, you can easily access any item on that website — yes, any at all. All you have to do is click on the item to inspect it.

Now we’re ready to start scraping, and there are lots of great libraries you can scrape with (BeautifulSoup, Selenium, Scrapy, etc). I’ll be using Beautiful Soup because it is really easy to use, but once you come to grips with web scraping, you can choose the library you feel most comfortable with.

I'll also be using Python for this project, so make sure you have Beautiful installed:

pip install beautifulsoup4After installing, we need to go ahead and import the necessary libraries.

import requests

from bs4 import BeautifulSoup

import pandas as pdSince there are lots of details to scrape from the website, I will only be scraping the product name, price, product image url and product url for each product. But don’t worry, you can use the same technique to scrape as many details as you want for each item.

Now we will define our headers ; it is really important to add headers to your scraping url because they allow access to blocked information on the website.

headers = {

"User-Agent":"Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:66.0) Gecko/20100101 Firefox/66.0",

"Accept-Encoding":"gzip, deflate",

"Accept":"text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",

"DNT":"1","Connection":"close", "Upgrade-Insecure-Requests":"1"

}Since we want users to specify the keyword to scrape and the number of pages, it is a good idea to put this in a function to allow reusability and flexibility.

def scrape(keyword_to_search:str, page_number:int):

titles = []

prices = []

item_url = []

images = []

print(f’scraping {page_number} pages in total')

for page in range(1, page_number+1):

print(f'scraping page {page}')

url = f'https://www.etsy.com/ca/search?q= {keyword_to_search}&page={i}&ref=pagination'

r = requests.get(url, headers=headers)

content = r.content

soup = BeautifulSoup(content,"html.parser")The requests.get(…) call reads the content on the page, and BeautifulSoup(…) makes the content readable and accessible in the html context.

for title in soup.find_all("h3", class_="wt-mb-xs-0"):

titles.append(title.text.strip())

for price in soup.find_all("span",class_="currency-value"):

prices.append(price.text)

links = [a["href"] for a in soup.find_all("a", href=True)]

for link in links:

if link.startswith("https://www.etsy.com/ca/listing/"):

item_url.append(link)

for image in soup.find_all("img"):

images.append(image.get('src'))Ok, so now we have details for each item. If you are inspecting the Etsy website with me, you'll see that all the details specified above can be extracted from those tags. This is true at the time of writing this article, but it’s important to remember that website owners often restructure their websites periodically. But all you have to do in that case is replace it with the correct tags in the code above.

We have all the details saved in the list, and we can now write them into a dictionary. This will give us a dictionary of lists, which we can then easily put in a Pandas DataFrame and close our function.

dict_of_scrapped_items = {

'title':titles,

'price(CAD)':prices,

'item_url':item_url,

'image_url':images

}

df = pd.DataFrame.from_dict(dict_of_scrapped_items, orient='index')

df = df.transpose()

df['category'] = keyword_to_search

return dfAnd that’s it! We have our data saved in a Pandas DataFrame and a simple df.head(), will show you the head of your data.

Now you can use the dataset for your analysis and for further modelling.

Some unusual situations that might be encountered while scraping and how to solve them include:

- A blocked IP address — this can be avoided by:

- Ensuring the website accepts scraping from the robots.txt terms and conditions

- Defining headers

- Adding time.sleep(); this reduces frequency of your request hitting the website

- Inconsistent data — some tags might be missing; this can be solved by:

- Adding a try-catch exception to handle such errors

- Filling empty entries with a value (i.e None, 0, etc.)

In conclusion, Web Scraping is very beneficial to data scientists; it allows you access to all the data on the web, which can then be used for analysis and insights. I hope you find it interesting as well.

Till my next article, let me know what you think too!